
Thursday, November 10, 2011

It pays to go big in the cloud..

Here is the math..

Monday, August 17, 2009

Sunday, January 14, 2007

Reducing bottlenecks in the JEE stack - Network Overhead: Part 1.

My motivation to write this article came from a recent visit I made to one of our customers. They have a distributed JEE middleware layer which uses protocols like JMS and RMI to move data across multiple JVMs running on multiple commodity hardware boxes. The JMS layer is used for sending asynchronous messages whereas the RMI layer is used for sending broadcast messages to multiple consumers. During very high usage, the network pipe gets extremely busy (up to 70% utilization), and this is a gigabit network we are talking about. Obviously, there was a concern that the network would get completely clogged at the rate at which usage was growing. In fact, the network would get so busy at peak usage times that they had to introduce artificial pauses and couldn't afford to send real-time messages any more. This is the reason I was there: to demonstrate how Terracotta could reduce this overhead significantly. We decided to replace the RMI broadcast layer with Terracotta DSO as a pilot. Let's look at how RMI and Terracotta would work in this scenario to understand the test.

A normal RMI invocation works as follows: a client application invokes a method on an object on the server. The parameters are marshalled and sent over the network to the server machine, where the computations are performed; the results are then marshalled and sent back to the client. The two main areas where delays occur are the marshalling (object serialization and deserialization) and the network overhead. Object serialization overhead is a lot higher if you are sending deep object graphs as parameters, since a deep-clone serialization (and deserialization) needs to be performed. In the above-mentioned case, each consumer JVM ran an RMI server, and the client JVM (the broadcaster) would iterate through a list of consumer IPs or hostnames and send a message (invoke a method) on each of the consumer JVMs.
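The broadcast loop described above can be sketched roughly as follows. This is a minimal illustration, not the customer's code: the names `Consumer`, `Payload`, `broadcast` and `RecordingConsumer` are all hypothetical, and the in-process consumer stands in for the remote stubs a real broadcaster would look up for each host.

```java
import java.io.Serializable;
import java.rmi.Remote;
import java.rmi.RemoteException;
import java.util.ArrayList;
import java.util.List;

// Hypothetical names throughout; only the shape of the loop comes from the text.
class RmiBroadcastSketch {

    /** Remote interface that each consumer JVM would export. */
    interface Consumer extends Remote {
        void onMessage(Payload payload) throws RemoteException;
    }

    /** RMI parameters must be serializable; the whole graph is marshalled per call. */
    static class Payload implements Serializable {
        private static final long serialVersionUID = 1L;
        final String text;
        Payload(String text) { this.text = text; }
    }

    /** Sends the same payload to every consumer, one remote call per consumer. */
    static int broadcast(List<Consumer> consumers, Payload payload) {
        int delivered = 0;
        for (Consumer consumer : consumers) {
            try {
                consumer.onMessage(payload); // over RMI, the payload is marshalled again here
                delivered++;
            } catch (RemoteException e) {
                // A real broadcaster would retry or drop the consumer; the sketch skips it.
            }
        }
        return delivered;
    }

    /** In-process stand-in so the loop can be exercised without a registry. */
    static class RecordingConsumer implements Consumer {
        final List<String> received = new ArrayList<>();
        @Override
        public void onMessage(Payload payload) { received.add(payload.text); }
    }
}
```

The point of the sketch is the cost model: with N consumers, the same payload is serialized and sent N times per broadcast.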

Terracotta is an open source clustering solution that works as follows: each clustered JVM is policed or managed by a central Terracotta server. Whenever a shared object is mutated (within the boundaries of a lock), the fine-grained, field-level deltas are recorded and propagated to other clustered JVMs if and when the same object is accessed there. Since there is no serialization and deserialization involved, there is no marshalling and unmarshalling overhead, no matter how deep the object graph is. Also, since Terracotta only ships fine-grained changes, the difference in the amount of data sent across the network can be huge depending on the case. All this is possible with Terracotta because it maintains physical object identity across the cluster (i.e. foo == bar is preserved, not just foo.equals(bar)).

OK, all this is in theory. Let's look at the actual test now. To simulate the customer environment, I wrote a simple RMI application with 1 broadcaster node broadcasting to 3 consumer nodes an object graph which is 10 levels deep and has a total size of 10K. For this result, the broadcast happened every 10 milliseconds and I used ntop to monitor network usage.

Results:

The RMI broadcast sent a total of 2.6 GigaBytes of data over one hour i.e. ~ 722 KiloBytes per second.

With Terracotta, the overall network usage over one hour was 491 MegaBytes i.e. ~ 136 KiloBytes per second.

This is a 5.3 times improvement by using the Terracotta solution. Now if you scale these results to 40 consumers and 4 broadcasters (actual real world scenario) - the improvement is ~ 11 times.
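For readers who want to check the per-second rates and the 5.3 figure, the arithmetic works out as below, assuming decimal units (1 GB = 10^9 bytes, 1 MB = 10^6 bytes) and a 3600-second hour. The unit convention is an assumption; the post does not say which one ntop reported.

```latex
\begin{align*}
\text{RMI:} \quad & \frac{2.6 \times 10^{9}\ \text{bytes}}{3600\ \text{s}} \approx 722 \times 10^{3}\ \text{bytes/s} \\
\text{Terracotta:} \quad & \frac{491 \times 10^{6}\ \text{bytes}}{3600\ \text{s}} \approx 136 \times 10^{3}\ \text{bytes/s} \\
\text{Ratio:} \quad & \frac{2.6 \times 10^{9}}{491 \times 10^{6}} \approx 5.3
\end{align*}
```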

Let's analyze these results. In this case, Terracotta is able to achieve such improvements because in the entire 10K object graph which is 10 levels deep, only one String value (100 bytes) is changing and being broadcasted. With the RMI solution, the entire 10K object graph is being broadcasted to every consumer node whereas Terracotta only sends the bytes that have changed.
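To get a feel for the gap between "ship the whole graph" and "ship what changed", here is a small sketch that serializes a 10-level graph with standard Java serialization and compares it with the size of a single changed String. The `Node` class, its 1 KB padding per level, and the `deltaSize` helper are assumptions made for illustration; they are not the test code from the post, and `deltaSize` is only a rough stand-in for a field-level delta, not Terracotta's wire format.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.ObjectOutputStream;
import java.io.Serializable;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;

class GraphSizeSketch {

    /** One level of a chained object graph; each level carries about 1 KB of padding. */
    static class Node implements Serializable {
        private static final long serialVersionUID = 1L;
        byte[] padding = new byte[1024];
        String label = "";
        Node child;
    }

    /** Builds a chain that is `depth` levels deep and returns its root. */
    static Node buildGraph(int depth) {
        Node root = new Node();
        Node current = root;
        for (int i = 1; i < depth; i++) {
            current.child = new Node();
            current = current.child;
        }
        return root;
    }

    /** Number of bytes Java serialization produces for the whole graph. */
    static int serializedSize(Serializable graph) {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        try (ObjectOutputStream out = new ObjectOutputStream(bytes)) {
            out.writeObject(graph);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return bytes.size();
    }

    /** Size of just the changed String value, as a rough proxy for a field-level delta. */
    static int deltaSize(String newValue) {
        return newValue.getBytes(StandardCharsets.UTF_8).length;
    }
}
```

Changing one label deep in the chain does not shrink what `serializedSize` reports: serialization always walks the full graph from the root.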

If we consider a non-broadcast use case where the changes do not need to be sent to every other node in the cluster, Terracotta would do a lot better, whereas a serialization-based clustering solution would still send the entire object graph to every other node to keep the cluster coherent. (There are some serialization-based clustering solutions that implement a buddy replication system or some other mechanism where the object graph is not shipped to every other node, but the drawbacks associated with those are outside the scope here.) Terracotta guarantees cluster coherency without having to send the changes to every peer in the cluster because the central server knows which object is being accessed on which node through the lock semantics and by preserving object identity. In fact, due to the object identity being preserved, Terracotta sends fine-grained delta-level changes ONLY WHERE they need to be sent i.e. only to the JVM where the same object is accessed.
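The idea of a central server that knows which node uses which object can be pictured with a toy routing table. This is only a conceptual sketch under my own assumptions: `Hub`, `recordAccess` and `recipientsOf` are hypothetical names, and nothing here reflects how the Terracotta server is actually implemented.

```java
import java.util.HashMap;
import java.util.HashSet;
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

class HubRoutingSketch {

    /** Toy hub that remembers which nodes have accessed which shared object. */
    static class Hub {
        private final Map<String, Set<String>> nodesByObject = new HashMap<>();

        /** Called when a node reads or locks a shared object. */
        void recordAccess(String objectId, String nodeId) {
            nodesByObject.computeIfAbsent(objectId, id -> new HashSet<>()).add(nodeId);
        }

        /** Nodes that need a delta for this object: everyone who uses it except the writer. */
        Set<String> recipientsOf(String objectId, String writerNodeId) {
            Set<String> recipients =
                    new TreeSet<>(nodesByObject.getOrDefault(objectId, new HashSet<>()));
            recipients.remove(writerNodeId);
            return recipients;
        }
    }
}
```

With such a table, a change to an object that only one node uses produces no peer traffic at all, which is the contrast with replicate-to-everyone schemes.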

All of the above improvements are over and above not having to maintain any RMI code and working with natural POJOs only. I have not pointed out the performance gains in the above test seen as a result of no serialization and not having to marshall and unmarshall the 10K, 10 level deep object graph as that is outside the scope of this discussion. I wanted to point out the impact network overhead can have on a typical JEE application.

For those who are interested, in the next part of this article I will post the source code of the test and instructions on how to run it.

Links:

ntop

RMI

Terracotta

Download ntop.

Download Terracotta.

Tuesday, December 05, 2006

Terracotta is now open source

Links:

http://www.terracotta.org

Sunday, November 05, 2006

Clustering - EJBs vs JMS vs POJOs

I am going to start this article by outlining my experience as a server lead for a major services company for more than 3 years, focusing mainly on the major pain points we faced in an effort to cluster our application in a cost effective manner. The experience was a hair-losing and maddeningly frustrating one.

When I started work, it was very obvious to me that there needed to be an effort to redesign the existing architecture of the system. It was a typical consumer facing application serving web and mobile clients. The entire application was written in Java (JSE and JEE) in a typical 3-tier architecture topology. The application was deployed on 2 application server nodes. All transient and persistent data was pushed into the Database to address high availability. As it turned out, as the user-base grew, the database was the major bottleneck towards RAS and performance. We had a couple of options: pay a major database vendor an astronomical sum to buy their clustering solution, or redesign the architecture to be a high-performing RAS system. Choosing the first option was tempting, but it just meant we were pushing the real shortcomings of our architecture "under the carpet", over and above having to spend an astronomical sum. We chose the latter.

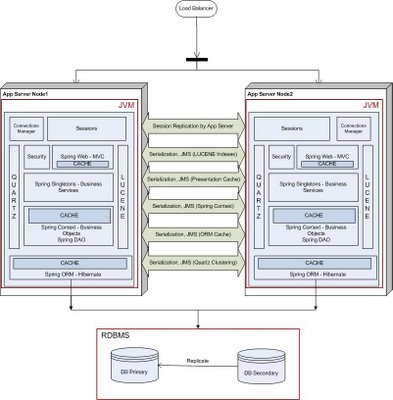

In the next evolution of the application, we made a clear demarcation between the transient and persistent data, and used the database to store only the "System of Record" information. To distribute all the transient information (sessions, caches etc.), we took the EJB+JNDI route (this was in 2001-2002). This is what our deployment looked like. Some of the known shortcomings of EJBs are that they are too heavy-weight and make you rely on a heavy-weight container. EJB3 has somewhat changed that. In terms of clustering, we found the major pain point to be the JNDI discovery.

If you implement an independent JNDI tree for each node in the cluster, failover is the developer's responsibility. This is because the remote proxies retrieved through a JNDI call are pinned to the local server only. If a method call to an EJB fails, the developer has to write extra code to connect to a dispatcher service, obtain another active server address, and make another call. This means extra latency.

If you have a central JNDI tree, retrieving a reference to an EJB is a two step process: first look up a home object (Interoperable Object Reference or IOR) from a name server and second pick the first server location from the several pointed by the IOR to get the home and the remote. If the first call fails, the stub needs to fetch another home or remote from the next server in the IOR and so on. This means that the name servers become a single point of failure. Also, adding another name server means every node in the cluster needs to bind its objects to this new server.
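Both variants boil down to the same retry pattern: try one server, and on failure move on to the next. A minimal sketch of that pattern is below; `callWithFailover` and the plain String server addresses are hypothetical, and a real implementation would go through JNDI lookups and EJB stubs rather than a `Function`.

```java
import java.util.List;
import java.util.function.Function;

class FailoverSketch {

    /**
     * Tries the call against each server address in order and returns the
     * first successful result. Every failed attempt adds latency.
     */
    static <R> R callWithFailover(List<String> servers, Function<String, R> call) {
        RuntimeException lastFailure = null;
        for (String server : servers) {
            try {
                return call.apply(server);
            } catch (RuntimeException e) {
                lastFailure = e; // remember the failure and try the next server
            }
        }
        throw new IllegalStateException("no server available", lastFailure);
    }
}
```

Whether this loop lives in application code (independent JNDI trees) or inside the stub (central JNDI tree), someone has to maintain the list of servers it walks.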

As a result, over the next three years, we moved on from EJBs and adopted the Spring Framework in our technology stack, along with a bunch of open source frameworks: Lucene for indexing our search, Hibernate as our ORM layer, role based security, Quartz for scheduling. We also dumped our proprietary Application Server for an open source counterpart. We wrote a JMS layer to keep the various blocks in the technology stack coherent across the cluster. To distribute the user session, we relied upon our application server's session replication capabilities. This is what our deployment looked like in mid 2006.

As you can see, our architecture had evolved into a stack of open source frameworks, with many different moving parts. The distribution of shared data happened through the JMS layer. The main pain points here were the overheads of serialization and maintaining the JMS layer.

The application developer had to make sure that all of these blocks were coherent across the cluster. The developer had to define points at which changes to objects were shipped across to the other node in the cluster. Getting this right was a delicate balancing act, as shipping these changes entailed serializing the relevant object graph on the local disk and shipping the entire object graph across the wire. If you do this too often, the performance of the entire system will suffer, while if you do this too seldom, the business will be affected.

This turned out to be quite a maintenance overhead. Dev cycles were taking longer and longer as we were spending a lot of time maintaining the JMS layer. Adding any feature meant we had to make sure that the cluster coherency wasn't broken.

Then came the time when we needed to add another node to our cluster to handle increased user traffic. This is what our deployment looked like. With each additional node in the cluster, we had to tweak how often the changes were shipped across the network in order to get optimum throughput and latency without saturating the network. The application server session replication had its own problems with regard to performance and maintenance.

The irony is that every block in our technology stack was written in pure Java as POJOs, and yet there was a significant overhead to distribute and maintain the state of these POJOs across multiple JVMs. One can argue that we could have taken the route of clustering our database layer. I will still argue that doing so would have pushed the problems in our architecture under the DB abstraction, where they would have surfaced later as our usage grew.

I have now been working at Terracotta for a while and have a new perspective from which to

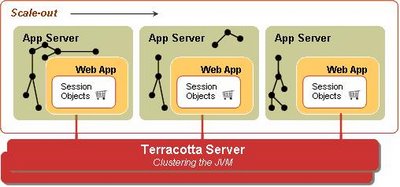

look at the above problem. Terracotta offers a clustering solution at the JVM level and becomes a single infrastructure level solution for your entire technology stack. If we had access to Terracotta, this is what our deployment would have looked like. You would still use the database to store the "System of Record" information, but only that.

Terracotta allows you to write your apps as plain POJOs, and declaratively distributes these POJOs across the cluster. All you have to do is pick and choose what needs to be shared in your technology stack and make such declarations in the Terracotta XML configuration file. You just have to declare the top level object (e.g. a HashMap instance), and Terracotta figures out at runtime the entire object graph held within the top level shared object. Terracotta maintains the cluster-wide object identity at runtime. This means obj1 == obj2 will not break across the cluster. All you need to do in the app is get() and mutate, without an explicit put(). Terracotta guarantees that the cluster state is always coherent and lets you spend your time writing business logic.
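In application code, "get() and mutate, without an explicit put()" looks like ordinary Java. The sketch below is a plain single-JVM example with hypothetical names (`Cart`, `carts`, `changeQuantity`); it assumes the `carts` field would be the top level object declared in the Terracotta XML configuration, which is not shown here, and it uses a synchronized block as the lock boundary within which changes are made.

```java
import java.util.HashMap;
import java.util.Map;

class SharedRootSketch {

    /** A plain POJO; note that it does not implement Serializable. */
    static class Cart {
        int quantity;
    }

    // Assumption: this field is what would be declared as the shared top level object.
    static final Map<String, Cart> carts = new HashMap<>();

    static void addCart(String user) {
        synchronized (carts) {
            carts.put(user, new Cart());
        }
    }

    /** Looks the cart up and mutates it in place; there is no put() afterwards. */
    static void changeQuantity(String user, int quantity) {
        synchronized (carts) {
            Cart cart = carts.get(user);
            cart.quantity = quantity;
        }
    }

    static int quantityOf(String user) {
        synchronized (carts) {
            return carts.get(user).quantity;
        }
    }
}
```

In a single JVM this works trivially because both lookups return the same object. The claim in the text is that preserved object identity lets the same code behave the same way across JVMs.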

Since Terracotta shares the state of POJOs at the JVM level, it is able to figure out what has changed at the byte level at runtime, and only ship the deltas across to other nodes, only when needed. The main drawback of database/filesystem session persistence centers around limited scalability when storing large or numerous objects in the HttpSession. Every time a user adds an object to the HttpSession, all of the objects in the session are serialized and written to a database or shared filesystem. Most application servers that utilize database session persistence advocate minimal use of the HttpSession to store objects, but this limits your Web application's architecture and design, especially if you are using the HttpSession to store cached user data. With Terracotta, you can store as much data in the HttpSession as is required by your app, as Terracotta will only ship across the object-level fine grained changes, not all the objects in the HttpSession. Also, Terracotta will do this only when this shared object in the HttpSession is accessed on another node (on demand), as Terracotta maintains the Object Identity across the cluster. Here are some benchmarking results.

When I looked closely at the Terracotta clustering technology after I started working here, I couldn't help but look back at those 3+ years I spent with my team trying to tackle everyday problems associated with clustering a Java application.

All the pain points I mentioned above are turned into gain points by Terracotta: no serialization, cluster-wide object identity, fine grained sharing of data and high performance.

Bottom Line: Terracotta is the only technology available today that lets you distribute POJOs as-is. Introducing Terracotta in your technology stack lets you spend your development resources on building your business application.

Links:

Terracotta web site.

Download Terracotta.

Tuesday, October 10, 2006

Clustering web applications on Tomcat - Part 4

Maintenance and Reliability

Tomcat:

Given that Tomcat shares the clustered state among all participating nodes, it is not unexpected that this architecture introduces bottlenecks at some point in the cluster. The overall throughput of the system drops as a function of the number of nodes added into the cluster. From the operations point of view, this can be a considerable overhead as IT cannot add capacity on demand.

Tomcat also supports a couple of persistence mechanisms which write the sessions to disk or to a database through JDBC. The caveat here is that the control over when these sessions are written to the persistent store is not very robust, and hence there can be some data loss and/or data integrity can be compromised.

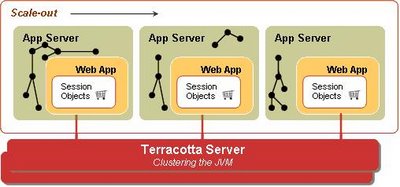

Terracotta functions as a dedicated state server and plugs into the JVM memory model to provide a highly transparent and efficient clustering implementation to resolve typical bottlenecks, as illustrated by the following diagram.

The Terracotta solution provides a persistent mode, where multiple instances of the Terracotta server share data through a shared disk space (e.g. SAN). This makes the solution more robust as it eliminates any single point of failure in the system.

A very interesting and powerful side-effect of the Terracotta solution is that the application is no longer constrained by the JVM heap size and has "virtual" memory available on demand. Typical 32 bit architectures allocate a maximum of 1.5-2 GB (approx.) heap to the JVM. If your application grows beyond this limit, the JVM will spend most of its time garbage collecting (very high performance hit) and will eventually throw an OOME (OutOfMemoryError). In the Terracotta world, the LRU (least recently used) objects are flushed from the local (client) cache to the Terracotta server, and the LRU objects on the server are flushed onto disk. Terracotta gives you "Network Attached RAM"; no more annoying OOMEs.
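The LRU flushing idea can be illustrated with the JDK's own `LinkedHashMap` in access order. This is a sketch of the general technique only: `LocalCache`, `fetch` and the `backingStore` map (standing in for the server) are hypothetical and say nothing about Terracotta's internals.

```java
import java.util.LinkedHashMap;
import java.util.Map;

class LruFlushSketch {

    /**
     * Local cache holding at most `capacity` entries. When it overflows, the
     * least recently used entry is flushed to a backing store instead of being lost.
     */
    static class LocalCache<K, V> extends LinkedHashMap<K, V> {
        private static final long serialVersionUID = 1L;
        private final int capacity;
        private final Map<K, V> backingStore;

        LocalCache(int capacity, Map<K, V> backingStore) {
            super(16, 0.75f, true); // true = iterate in access order (LRU first)
            this.capacity = capacity;
            this.backingStore = backingStore;
        }

        @Override
        protected boolean removeEldestEntry(Map.Entry<K, V> eldest) {
            if (size() > capacity) {
                backingStore.put(eldest.getKey(), eldest.getValue()); // flush, don't drop
                return true;
            }
            return false;
        }

        /** Looks in the local cache first, then faults the value back in from the store. */
        V fetch(K key) {
            V value = get(key);
            if (value == null && backingStore.containsKey(key)) {
                value = backingStore.remove(key);
                put(key, value); // may in turn flush another LRU entry
            }
            return value;
        }
    }
}
```

The local heap footprint stays bounded by `capacity`, while the total data set is limited only by the store behind it.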

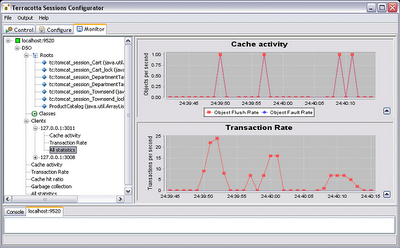

As mentioned before, Terracotta also ships with a very useful Admin Console Tool, which allows you to look at the shared sessions and the objects within the session at run time. From the operations perspective, it allows you to monitor the system cache activity, transaction rate and the cache hit ratio as shown in the following screenshots.

Conclusion

Pairing Tomcat with Terracotta delivers a very unique and powerful linear-scale and high-performance HTTP Session clustering solution. Your existing Tomcat environment becomes very powerful and scalable by introducing Terracotta Sessions into the picture. Terracotta allows you to use clustering as an infrastructure-level service by not introducing any clustering code in your application.

Because of its unique architecture, Terracotta delivers the following benefits:

- Linear scale-out

- Significantly reduced TCO

- High performance

- No application changes – it is not necessary to write to an API, implement serialization, or call setAttribute().

- Network efficiency – Without serialization, changes are detected and transmitted at the field level. Replication of data occurs only where necessary.

<- Part 3

Clustering web applications on Tomcat - Part 3

Performance

We performed a benchmarking exercise to highlight the performance characteristics of the two solutions. Our test consisted of the following simulated real world actions:

1. Log in to the application

2. Add several items to the shopping cart (The JPetStore application stores shopping cart in session)

3. Modify the quantity of the items in the shopping cart.

To obtain quantifiable results, Step 3 was repeated for 60,000 iterations and the resulting numbers of transactions per second were measured.

Results:

The following graphs show the performance test results for both the Terracotta Sessions and Tomcat Pooled Mode replication clustering solutions. To test linear scalability, the throughput results were obtained for one node in the cluster, not cluster wide.

In the above graph, Tomcat with Terracotta scales linearly as more nodes are added with a flat curve (red curve - no decrease in throughput). Tomcat with its "Pooled Mode Replication" clustering solution, on the other hand, does not scale linearly with a curve that decreases as more nodes are added (blue curve).

As a result, as is evident in the following graph, the overall system performance increases (Terracotta Sessions + Tomcat - red curve) as against decreasing (Tomcat Clustering - blue curve) when more nodes are added into the cluster.

<- Part 2 Part 4 ->


Clustering web applications on Tomcat - Part 2

Now that we have the background, let's go ahead with the test.

For this test, we used the JPetStore application, which is a widely accepted reference implementation of a typical web application, using various components of the J2EE stack. We used the JPetStore implementation which is bundled with the Spring Framework (v1.2.8) and can be downloaded here.

Simplicity

Tomcat:

To cluster JPetStore with Tomcat, we had to do the following:

- All the session attributes and the objects in their reference graph must implement java.io.Serializable

- Uncomment the Cluster element in server.xml

- Uncomment the Valve(ReplicationValve) element in server.xml

- If the Tomcat instances are running on the same machine, make sure the tcpListenPort attribute is unique for each instance.

- Make sure the web.xml has the <distributable/> element or set at your <Context distributable="true" />

- Make sure that the jvmRoute attribute is set at your Engine

- Make sure that all nodes have the same time and sync with NTP service!

- Make sure that the loadbalancer is configured for sticky session mode.

In addition, the application had to make an explicit call to setAttribute() on the session to reduce network chattiness.

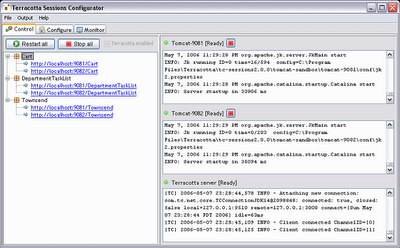

Terracotta:

With Terracotta Sessions, we were able to cluster the JPetStore application in a few minutes, thanks to the really helpful configurator tool that is shipped with the product. The entire process involved firing up the configurator tool, importing the JPetStore war file from within the configurator and firing up the server instances. Done.

Terracotta clusters the sessions declaratively, which means we did not make any code changes or implement java.io.Serializable on session attributes. The entire process involves:

- Declare the application name in the Terracotta configuration xml file (tc-config.xml).

- Fire up the Terracotta Server.

- Make a call to each Tomcat node in the cluster through the Terracotta client script.

<- Part 1 Part 3 ->

Saturday, September 16, 2006

Clustering web applications on Tomcat - Part 1

Does your application scale with your business?

In today's world of open-source (read "free") frameworks and increasingly cheap hardware, the limits of applications are being tested more than ever before. An idea is brewed over latte and chai in the neighborhood coffee-shop, is somehow translated into boxes-and-arrows on a whiteboard, and six months later, is receiving over a million hits. So, as it turns out, the idea was really good and is on its way, as was intended. The problem is most enterprise web applications are not ready to scale and handle the requirements of large volumes, high availability and performance without some refactoring (read "high cost" and "bug-prone") or using some off-the-shelf solution or both. It is a real-world challenge for IT professionals to deliver on the promise of always-on, highly performing web applications. Also, to combat this challenge, it is widely accepted that scaling out an application (moving it from one to many physical machines) is a lot more cost-effective and flexible than scaling up (increasing the capacity of the single machine).

Let's look at this problem within the context of a web application, which is deployed on multiple machines (scale-out).

In typical non-static web applications, the persistent data is stored in a database and the transient (lasting only a short time; existing briefly; temporary) data is stored in a session. A session is the duration for which the user is actively interacting with the web application. Since this data only needs to live while the user is interacting with the application, it is transient in nature. Now, persistent data can be accessed by multiple application instances from multiple physical locations, as the application communicates with the database through a network connection. This is not true for transient data. The transient session data resides on the local memory and can only be accessed by the local single application instance. Needless to say, if one of these instances goes down due to any reason, all the sessions on that instance would be lost. Hence the need for a clustering solution that will make this transient session information available to multiple nodes in a cluster, without compromising the integrity of the data.

A good clustering solution should offer the following:

1. Simple. It should be as simple as possible, but not simpler.

2. Fast. It should be high-performing and hence linearly scalable (within the constraints of the network speed and bandwidth). In other words, moving an application from one to many nodes should not decrease the overall system performance.

3. Reliable. It should be easy to maintain and dependable.

In this article, we will highlight a couple of solutions available today for scaling web applications deployed on the Apache Tomcat Servlet Container (Tomcat) and compare these solutions based on simplicity, performance and reliability.

Tomcat:

Tomcat is one of the most widely used Servlet Containers available today and is one of the official Reference Implementations for the Java Servlet and JavaServer Pages technologies. It allows web applications to store transient data through the HttpSession interface, which it internally stores in a HashMap. It creates a unique session for each user, and allows the application to set and get attributes to and from the session. The basic requirement of clustering any web application with Tomcat is to distribute these sessions across multiple nodes in a cluster, for which we will look at the following options:

1. The Tomcat Sessions Clustering solution shipped with Apache Tomcat of the Apache Software Foundation.

2. Terracotta Sessions, which is one of the many clustering solutions offered by Terracotta, Inc.

Before we delve into the comparison, it is important to look at the underlying architecture of the two solutions and their approach towards clustering.

Tomcat Clustering:

Tomcat's homegrown clustering solution is built on a peer-to-peer architecture and replicates the session at the application level. In this approach, whenever the session changes, the entire session is serialized on disk and is sent to every other node in the cluster. The developer must be careful to call setAttribute() on the session to make sure the important changes are propagated across the cluster. How often and where the developer calls setAttribute() can be a little tricky (if you forget to call it, you won't know it's broken until a failover happens; if you call it too often, you have potential production performance-degradation on your hands that might take days or weeks to fix, provided you can find the root-cause).
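The setAttribute() pitfall is easy to reproduce in miniature. The class below is a hypothetical stand-in, not Tomcat's session implementation: it assumes, as described above, that a copy is serialized for the peer node only when `setAttribute()` is called, and it keeps that copy in a local map instead of sending it over the network.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.ObjectInputStream;
import java.io.ObjectOutputStream;
import java.io.Serializable;
import java.util.HashMap;
import java.util.Map;

class ReplicationSketch {

    /** Hypothetical session whose replica is refreshed only inside setAttribute(). */
    static class ReplicatedSession {
        private final Map<String, Object> local = new HashMap<>();
        private final Map<String, byte[]> replica = new HashMap<>(); // what a peer would hold

        void setAttribute(String name, Serializable value) {
            local.put(name, value);
            replica.put(name, serialize(value)); // the whole value is re-serialized each time
        }

        Object getAttribute(String name) {
            return local.get(name);
        }

        /** What a peer node would see after a failover. */
        Object readFromReplica(String name) {
            return deserialize(replica.get(name));
        }
    }

    static byte[] serialize(Serializable value) {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        try (ObjectOutputStream out = new ObjectOutputStream(bytes)) {
            out.writeObject(value);
        } catch (IOException e) {
            throw new IllegalStateException(e);
        }
        return bytes.toByteArray();
    }

    static Object deserialize(byte[] data) {
        try (ObjectInputStream in = new ObjectInputStream(new ByteArrayInputStream(data))) {
            return in.readObject();
        } catch (IOException | ClassNotFoundException e) {
            throw new IllegalStateException(e);
        }
    }
}
```

If the application adds an item to a cart list obtained from the session and forgets the second `setAttribute()` call, the local node sees the new item but `readFromReplica()` still returns the old list, which is exactly the bug that stays hidden until a failover.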

Terracotta Sessions:

Terracotta Sessions uses Terracotta's Distributed Shared Objects (DSO) technology, which is built on a hub-and-spoke architecture. This technology takes a radical approach of clustering at the JVM level, which has some powerful advantages. The solution drills down to the byte level and is able to figure out at run time the bytes that have changed, and only propagates those bytes. The hub-and-spoke architecture eradicates the need to send the changed bytes to every other node in the cluster. This also means that the session is clustered without writing any additional code (yes, no serialization required), which makes it very inviting from an operations perspective. Very cool.

Part 2 ->
